Abstract

This study investigates the integration of an AI-driven support agent within a Moodle-based English for Academic Purposes (EAP) course to address the compounded challenges faced by repeating students, including deficits in self-directed learning, engagement, and motivation. Leveraging prompt-engineered large language models (LLMs), the AI agent delivered personalized guidance and SMART goal-setting support tailored to individual learner needs. A case study involving six students at Xi’an Jiaotong-Liverpool University (XJTLU) demonstrated significant improvements in goal-setting frequency, metacognitive strategies, and autonomous study engagement. Post-intervention results revealed a 175% increase in self-directed learning time and unanimous adoption of the AI tool for academic self-assessment. The findings underscore the potential of AI-driven interventions to foster self-regulated learning and academic resilience, offering actionable insights for educators and developers to bridge pedagogical gaps for at-risk student populations.

Keywords: AI-driven, English for Academic Purposes (EAP), Self-Directed Learning

Introduction

English for Academic Purposes (EAP) courses are critical for equipping students with the linguistic and analytical skills necessary for success in higher education. However, students repeating these courses due to prior academic challenges often face compounded difficulties, including deficits in self-directed learning, low engagement, and diminished motivation. Traditional pedagogical interventions frequently fail to address these students’ individualized needs, particularly in fostering autonomy and sustained participation.

This study explores the integration of an AI-driven support agent within a Moodle-based EAP045 course at Xi'an Jiaotong-Liverpool University (XJTLU). The AI agent, designed to promote self-directed learning and deliver personalized guidance, dynamically adapts to repeating students’ academic needs. While prior research has examined AI’s broader educational implications, this study focuses on the practical implementation of such systems, emphasizing the training processes required to align AI outputs with pedagogical objectives. By detailing technical and pedagogical considerations, the study contributes actionable insights for educators and developers seeking to leverage AI for targeted academic support.

Literature Review

AI in Educational Contexts

The integration of Artificial Intelligence in education (AIEd) has been a focal point of research for nearly three decades, driven by its potential to revolutionize personalized learning and instructional efficiency. A growing body of evidence underscores AI’s capacity to enhance educational outcomes through adaptive, data-driven interventions. In language education, for instance, AI-mediated systems have demonstrated significant promise in improving learning achievement, motivation, and self-regulated learning behaviors. Wei et al. (2022) highlight that AI tools can analyze learner data to generate individualized feedback and dynamically adjust instructional content, thereby fostering engagement and autonomy among students. Such systems are particularly effective in addressing the heterogeneous needs of learners, a critical advantage in foundational courses such as English for Academic Purposes (EAP), where students often enter with varying proficiency levels and learning preferences.

The success of AIEd, however, hinges on its alignment with established pedagogical principles. Zawacki-Richter et al. (2019) emphasize that AI-driven interventions must be explicitly grounded in educational theory to avoid becoming mere technological novelties. Their comprehensive review identifies personalization and retrieval practice as two key evidence-based strategies that enhance learning efficacy when integrated into AI systems. Personalization, as articulated by Kirschner and Hendrick (2020), relies on the systematic collection and analysis of learner-specific data to tailor instruction. In the context of this study, personalization is operationalized through reflective writing tasks: students submit 200-word reflections on their language learning challenges and aspirations, which are then processed by an AI agent to generate personalized SMART goals. These goals guide learners toward targeted activities aligned with their unique needs, transforming abstract aspirations into actionable steps.

Retrieval practice, another cornerstone of effective pedagogy, further strengthens this framework. Defined as the active recall of information from memory—rather than passive review—retrieval practice enhances long-term retention, deepens conceptual understanding, and promotes transfer of knowledge to novel contexts (Karpicke & Aue, 2015; Pan & Rickard, 2018). Empirical studies across disciplines, from language acquisition to STEM education, confirm its superiority over traditional study methods (Roediger & Butler, 2011). In this project, retrieval practice is embedded within the AI agent’s task recommendations. For example, the system may prompt students to reconstruct key vocabulary from memory during follow-up exercises or apply previously learned grammatical structures in new writing tasks. By interweaving retrieval practice with personalized goal-setting, the AI agent ensures that learners not only address immediate challenges but also reinforce foundational knowledge through active engagement.

Reinforcement Learning vs. Large Language Models

Two dominant paradigms govern AI system design: Reinforcement Learning (RL) and Large Language Models (LLMs). RL, which trains agents to optimize decisions through trial-and-error interactions, excels in dynamic, sequential environments such as game-based learning or robotics (Ghamati et al., 2024). Conversely, LLMs specialize in natural language understanding and generation, enabling nuanced communication and context-aware responses (Zaraki et al., 2020). For educational contexts requiring dialogue-based mentorship and personalized feedback, LLMs present distinct advantages due to their linguistic versatility and ability to process unstructured input.

However, LLMs also have limitations. A challenge arises when agents trained on static datasets fail to adapt to new circumstances: training on an offline (static) dataset fits model parameters to a snapshot of the data distribution, which may be misaligned with evolving real-world conditions (Rannen-Triki et al., 2024). To address this problem, learners' reflection inputs need to be reviewed continually, and the prompt and dataset modified accordingly, so that the AI agent provides goals relevant to students' current learning levels.

LLM Personalization via Prompt Optimization

To tailor LLMs to specific educational tasks, prompt optimization has emerged as a key technique. This approach steers pre-trained models like GPT-3.5 through structured prompts that align outputs with user needs (Brown et al., 2020). Ekin's (2023) foundational work on prompt engineering outlines principles critical to this process, emphasizing clarity, contextual relevance, and iterative refinement. The current study adopts five of Ekin's (2023) strategies:

1. Clear and Specific Instructions: Prompts explicitly define the AI’s role (e.g., “Act as an academic writing mentor”) and prioritize actionable feedback over generic advice.

2. Explicit Constraints: Outputs are bound by format (e.g., bullet-pointed goals), length (e.g., 3–5 objectives), and scope (e.g., focus on grammar or thesis development).

3. Context and Examples: Prompts incorporate anonymized student reflections and exemplar responses to ground outputs in real learner experiences.

4. Leveraging System 1 and System 2 Questions: The AI balances intuitive, rapid-response prompts (System 1—e.g., “Identify two grammar errors in this sentence”) with analytical, reflection-driven tasks (System 2—e.g., “Propose a revision strategy for weak thesis statements”).

5. Controlling Output Verbosity: Responses are calibrated to learner proficiency, avoiding overwhelming novices with excessive detail while providing sufficient depth for advanced students.

By synthesizing Ekin's (2023) framework with the unique demands of EAP045 learners, this study demonstrates how prompt engineering can transform generic LLMs into tailored academic mentors. The subsequent sections detail the AI agent's development, training processes, and integration within the Moodle platform, offering a blueprint for replicability in similar educational contexts.
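As an illustration of how strategies 1, 2, 3, and 5 above might be combined into a single system prompt, the following minimal Python sketch assembles a role, explicit constraints, contextual examples, and a verbosity rule. The names `PromptSpec` and `build_system_prompt`, and the exact wording of each instruction, are assumptions made for illustration and are not the prompts used in the study.

```python
from dataclasses import dataclass


@dataclass
class PromptSpec:
    """Hypothetical container for the prompt settings described above."""
    role: str          # strategy 1: explicit role
    focus: str         # strategy 2: scope constraint
    min_goals: int     # strategy 2: length constraint (lower bound)
    max_goals: int     # strategy 2: length constraint (upper bound)
    proficiency: str   # strategy 5: "novice" or "advanced"


def build_system_prompt(spec: PromptSpec, examples: list[tuple[str, str]]) -> str:
    """Assemble a system prompt from the spec and (reflection, response) examples."""
    # Strategy 5: calibrate verbosity to learner proficiency.
    verbosity = (
        "Keep each goal to one short sentence."
        if spec.proficiency == "novice"
        else "Add one sentence of rationale to each goal."
    )
    lines = [
        f"Act as {spec.role}.",
        f"Give actionable feedback focused on {spec.focus}; avoid generic advice.",
        f"Return {spec.min_goals}-{spec.max_goals} bullet-pointed goals.",
        verbosity,
    ]
    # Strategy 3: ground the output in anonymized reflections and exemplar responses.
    for reflection, response in examples:
        lines.append(f"Example reflection: {reflection}")
        lines.append(f"Example response: {response}")
    return "\n".join(lines)


spec = PromptSpec("an academic writing mentor", "grammar", 3, 5, "novice")
prompt = build_system_prompt(
    spec, [("I make tense errors in essays.", "- Review one tense rule per day.")]
)
print(prompt)
```

Keeping the settings in a small data structure rather than a hand-edited string makes it easier to vary one constraint at a time during iterative refinement.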

Methodology

Research Design

This study adopts a case study approach, focusing on the integration of an AI-driven support agent within the EAP045 course. The research design emphasizes the practical implementation of AI in education, particularly the training processes required to align the AI agent with learners' needs. The study consists of two main phases: (1) training the AI agent and (2) conducting a pilot study with students. The training phase involves designing prompts and knowledge bases, testing and refining prompts, and ensuring the AI agent can effectively respond to specific student questions. The pilot study phase involves inviting students to use the AI agent, collecting user experience data, and analyzing the effectiveness of the AI agent in promoting self-directed learning.

Training the AI Agent

Based on Ekin's (2023) framework and the unique demands of EAP045 learners, researchers designed prompts to guide the AI agent in providing personalized academic support. The training process of the AI agent is guided by prompt engineering principles, which stress the importance of clear instructions, explicit constraints, and contextual examples. The following details the comprehensive steps taken to train the AI agent:

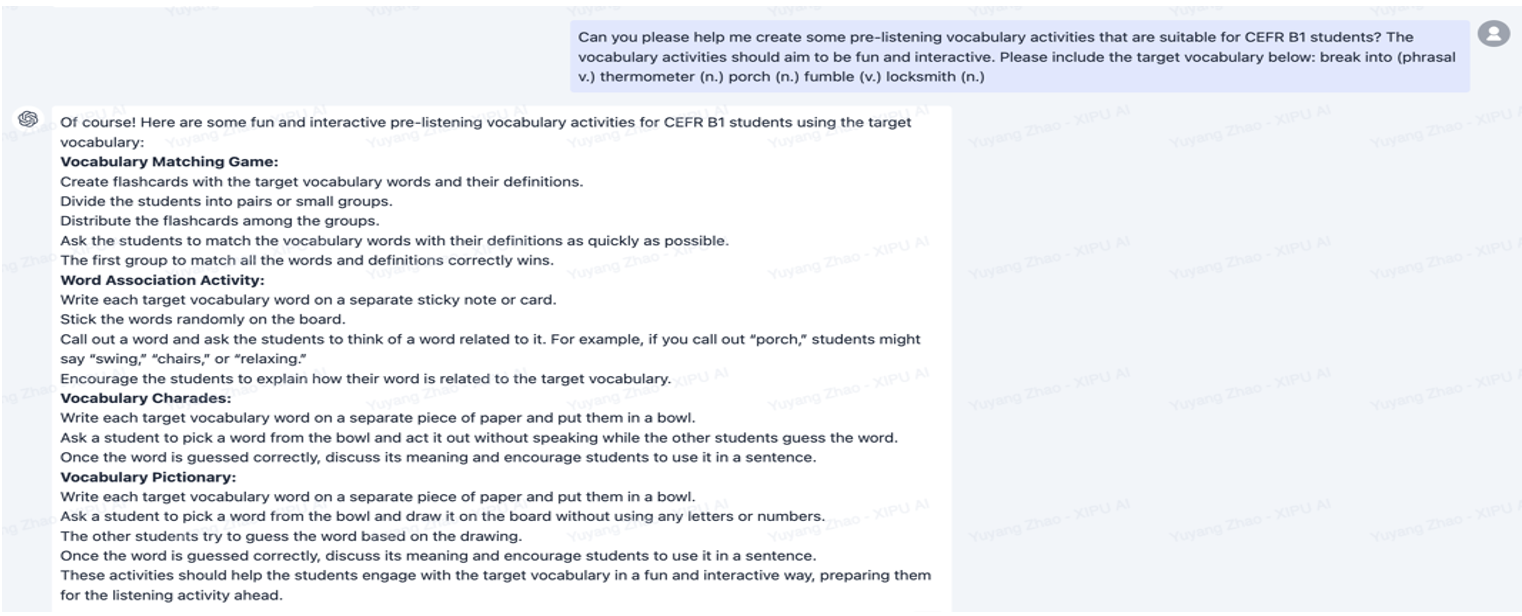

Step 1: Knowledge Base Integration

The training commenced with the integration of a comprehensive knowledge base. This involved curating and uploading course-specific materials, such as the XJTLU EAP Reading Booklet, lesson plans, and online materials, along with pedagogical theories including retrieval practice and self-regulated learning strategies (see image 1). To ensure the AI agent could effectively ground its suggestions within the course content, resources were meticulously tagged with metadata, such as keywords, topics, and difficulty levels. Furthermore, each resource was mapped to specific learning objectives. For instance, Unit 6, which focuses on skimming techniques, was linked to the course goal of enhancing reading comprehension, while Week 7's lesson on thesis development was tied to academic writing proficiency. This contextual grounding ensures the AI agent's recommendations are not only relevant but also aligned with the course's academic aims.

Image 1
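The tagging and objective mapping described in Step 1 can be pictured as a small structured catalogue. The sketch below is a hypothetical Python representation: the `Resource` fields mirror the metadata named in the text (keywords, topics, difficulty levels, learning objectives), while the specific keyword and difficulty values are invented placeholders rather than the study's actual tags.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Resource:
    """One knowledge-base entry with the metadata described in Step 1."""
    title: str
    location: str               # e.g. "Unit 6" or "Week 7"
    keywords: tuple[str, ...]   # placeholder values, not the study's tags
    topic: str
    difficulty: str             # placeholder values
    objective: str              # course learning objective the resource maps to


KNOWLEDGE_BASE = [
    Resource("Skimming Techniques", "Unit 6", ("skimming", "main ideas"),
             "reading", "intermediate", "reading comprehension"),
    Resource("Thesis Development", "Week 7", ("thesis", "argument"),
             "writing", "intermediate", "academic writing proficiency"),
]


def find_by_objective(objective: str) -> list[Resource]:
    """Return every resource mapped to the given learning objective."""
    return [r for r in KNOWLEDGE_BASE if r.objective == objective]


for resource in find_by_objective("reading comprehension"):
    print(resource.location, resource.title)
```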

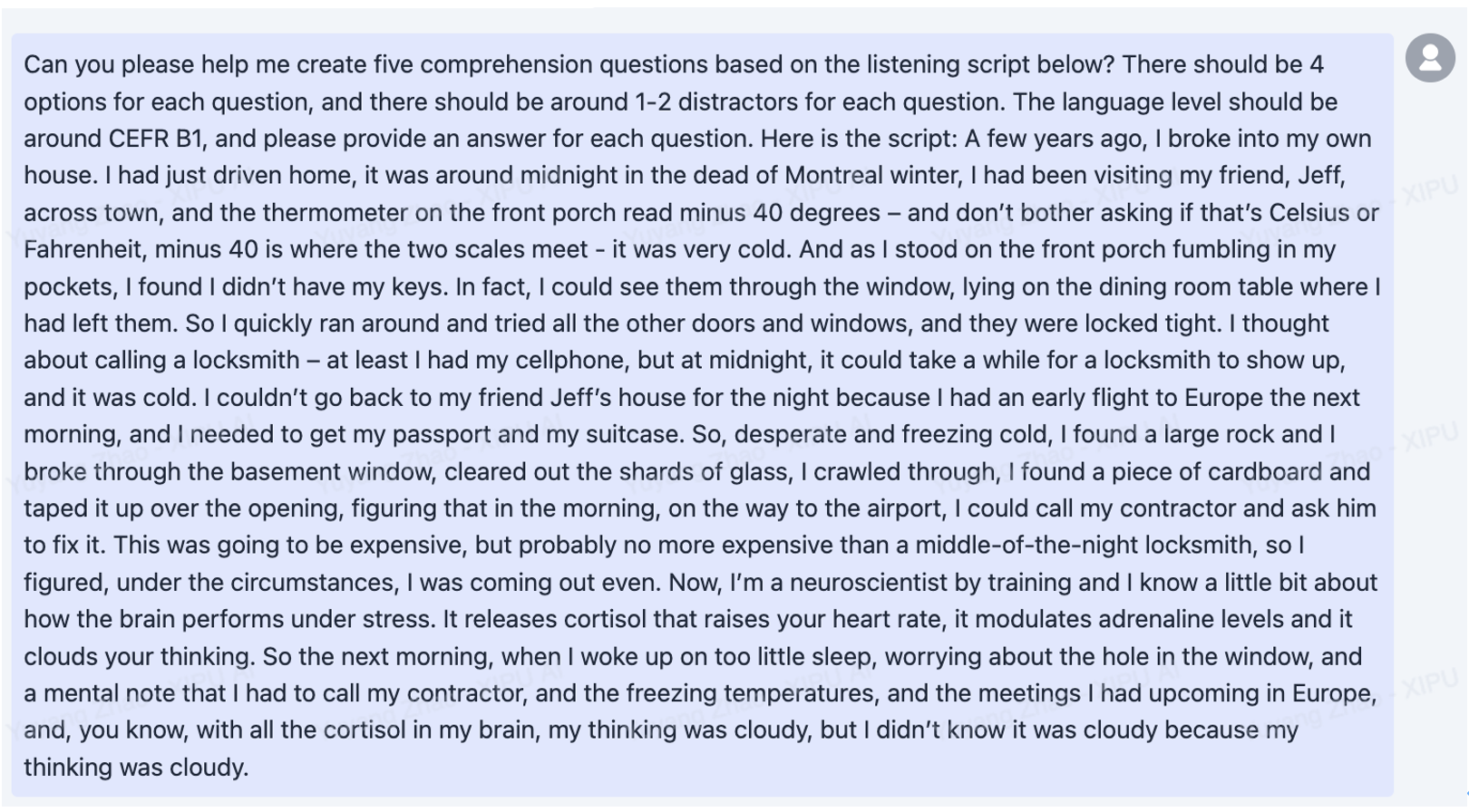

Step 2: Prompt Design for Personalized Feedback

Subsequently, prompts were designed to facilitate personalized feedback. These prompts were crafted to embody the role of an academic mentor, such as “Act as an academic mentor. Analyze the student’s reflection below. Identify ONE key challenge and propose a SMART goal using resources from the knowledge base” (see image 2). To maintain clarity and relevance, explicit constraints were incorporated. The AI agent was instructed to format its responses as bullet-pointed goals, ranging from 3 to 5 items, and to tie suggestions to specific course materials. For example, prompts included dynamic references such as “For [CHALLENGE], recommend [ACTIVITY] from [UNIT/WEEK] and link it to [STRATEGY]” (see image 3). Additionally, rule-based triggers were established. If a student's reflection mentioned “grammar errors,” the AI agent would reference Week 5's Grammar Workshops. Similarly, if “slow reading” was noted, the agent would suggest Unit 6's Skimming Techniques. This structured approach ensures the AI agent's feedback is both actionable and contextually appropriate.

Image 2

Image 3
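The rule-based triggers and the bracketed template from Step 2 can be sketched as a simple phrase lookup. The two trigger phrases and their targets come from the description above; the function name `match_triggers`, the case-insensitive substring matching, and the default strategy label are assumptions for illustration only.

```python
# Template taken from the Step 2 description, with brackets turned into fields.
TEMPLATE = "For {challenge}, recommend {activity} from {location} and link it to {strategy}."

# Trigger phrase -> (activity, unit/week), as described in Step 2.
TRIGGERS = {
    "grammar errors": ("Grammar Workshops", "Week 5"),
    "slow reading": ("Skimming Techniques", "Unit 6"),
}


def match_triggers(reflection: str, strategy: str = "retrieval practice") -> list[str]:
    """Return one filled-in recommendation per trigger phrase found in the reflection.

    Matching is a plain case-insensitive substring test (an assumption);
    the default strategy label is also a placeholder.
    """
    text = reflection.lower()
    return [
        TEMPLATE.format(challenge=phrase, activity=activity,
                        location=location, strategy=strategy)
        for phrase, (activity, location) in TRIGGERS.items()
        if phrase in text
    ]


print(match_triggers("I keep making grammar errors in my essays."))
```

In practice such rules would most likely be stated in the prompt itself rather than run as separate code; the sketch only makes the mapping explicit.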

Step 3: Example-Driven Training

The training process was further enriched through example-driven training. Annotated student reflections and corresponding model responses were integrated into the prompts. For instance, a student input stating, “I struggle to identify main ideas in academic texts,” would elicit an AI response: “Try the skimming exercises in Unit 6, focusing on topic sentences. Pair this with the ‘Keyword Highlighting’ strategy from Week 3.” This method enables the AI agent to learn from concrete examples, thereby enhancing the accuracy and relevance of its responses (see image 4).

Image 4
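Example-driven training of this kind is commonly implemented as few-shot prompting, in which annotated input and response pairs are placed ahead of the new student input. The sketch below assumes a chat-style message list with `role` and `content` keys, a widespread convention that may not match the platform actually used in the study; `build_messages` is a hypothetical helper.

```python
# Annotated example pair quoted from Step 3.
FEW_SHOT = [
    (
        "I struggle to identify main ideas in academic texts.",
        "Try the skimming exercises in Unit 6, focusing on topic sentences. "
        "Pair this with the 'Keyword Highlighting' strategy from Week 3.",
    ),
]


def build_messages(system_prompt: str, reflection: str) -> list[dict[str, str]]:
    """Place the system prompt and worked examples before the new reflection."""
    messages = [{"role": "system", "content": system_prompt}]
    for student_input, model_response in FEW_SHOT:
        messages.append({"role": "user", "content": student_input})
        messages.append({"role": "assistant", "content": model_response})
    # The new, unanswered reflection always comes last.
    messages.append({"role": "user", "content": reflection})
    return messages


for message in build_messages("Act as an academic mentor.", "I read too slowly."):
    print(message["role"], "->", message["content"][:50])
```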

Step 4: Testing and Iteration

After the initial setup, rigorous testing and iteration followed. Sample student reflections were simulated to evaluate the AI agent's performance. For example, when presented with the input “I need to improve my academic reading skills. Can you help me set a weekly goal?” the AI agent would generate a response: “Absolutely! Based on the Reading Booklet, a SMART goal could be: 'I will practice skimming and scanning techniques for 30 minutes daily using Unit 1 materials, aiming to increase my reading speed and comprehension.' How does this sound? Would you like to adjust any part of this goal?” (see image 5). Researchers meticulously assessed the clarity, relevance, and effectiveness of these responses. Based on the findings, prompts were iteratively refined. Language was simplified for better comprehension while preserving specificity and measurability. For example, a prompt was adjusted to “Based on Unit 6 of the Reading Booklet, a good goal is: 'I will practice skimming techniques for 20 minutes daily, focusing on identifying main ideas. I aim to improve my comprehension of academic texts by the end of the week.'” This refinement ensured the AI agent's guidance resonated with the students' actual learning needs. Moreover, feedback from the AI agent's responses was incorporated to further optimize the prompts, enabling the AI agent to provide more precise and personalized support (see images 6 and 7).

Image 5

Image 6

Image 7
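Part of the manual review in Step 4 could be supported by simple automated checks on each generated response. The following sketch is an assumption, not the researchers' procedure: it uses three hypothetical regular expressions to test whether a response names a course resource, states a measurable amount of practice, and includes a time frame.

```python
import re

# Hypothetical surface checks loosely corresponding to SMART criteria.
CHECKS = {
    "resource": re.compile(r"\b(Unit|Week)\s+\d+\b"),
    "measurable": re.compile(r"\b\d+\s+minutes\b"),
    "time_bound": re.compile(r"\b(daily|weekly|by (the end of the|next) week)\b",
                             re.IGNORECASE),
}


def check_response(response: str) -> dict[str, bool]:
    """Report which of the surface checks a generated response passes."""
    return {name: bool(pattern.search(response)) for name, pattern in CHECKS.items()}


refined = (
    "Based on Unit 6 of the Reading Booklet, a good goal is: 'I will practice "
    "skimming techniques for 20 minutes daily, focusing on identifying main ideas. "
    "I aim to improve my comprehension of academic texts by the end of the week.'"
)
print(check_response(refined))
```

Checks like these only flag missing surface features; judging clarity and pedagogical relevance still requires human review.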

Step 5: Continuous Adaptation

Finally, provisions for continuous adaptation were established. The knowledge base was designed to be updated with new resources, such as vocabulary lists, and prompts were revised to reflect these changes. Student feedback was leveraged to reinforce effective contextual links. For example, if a student responded positively to a Unit 6 tip, the AI agent would continue to reference this unit in future interactions (see images 8 and 9). This ongoing adaptation ensures the AI agent remains responsive to the evolving learning needs of the students.

Image 8

Image 9
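One simple way to picture the feedback loop in Step 5 is a running tally of student reactions per resource. The source does not specify how feedback was stored or applied, so the `FeedbackTracker` class below, including its plus-one and minus-one scoring, is purely a hypothetical illustration.

```python
from collections import Counter


class FeedbackTracker:
    """Hypothetical tally of student reactions to referenced units or weeks."""

    def __init__(self) -> None:
        self.scores: Counter[str] = Counter()

    def record(self, location: str, positive: bool) -> None:
        """Add 1 for a positive reaction and subtract 1 for a negative one."""
        self.scores[location] += 1 if positive else -1

    def preferred(self, n: int = 1) -> list[str]:
        """Return up to n locations with the highest positive net score."""
        return [loc for loc, score in self.scores.most_common(n) if score > 0]


tracker = FeedbackTracker()
tracker.record("Unit 6", positive=True)
tracker.record("Unit 6", positive=True)
tracker.record("Week 5", positive=False)
print(tracker.preferred())
```

The preferred locations could then be added to the prompt as context for later interactions.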

Pilot Study

After completing the training of the AI agent, a pilot study was conducted to evaluate its effectiveness in promoting self-directed learning among students. The pilot study involved the following steps:

Participant Recruitment

Selection Criteria: Researchers invited six Year 1 students enrolled in the EAP045 course at XJTLU to participate in the pilot study. Participants were selected based on their academic performance and self-reported learning needs to ensure diversity in the sample and enhance the study’s representativeness.

Recruitment Process: Researchers communicated with potential participants via email, introducing the purpose and process of the study. Interested students were asked to complete a brief survey to confirm their willingness to participate and provide basic information about their learning background and challenges. Ultimately, six students were selected to participate in the pilot study.

Agent Usage and Interaction

Usage Instructions: Researchers provided participants with detailed instructions on how to use the AI agent, including accessing the agent through the Moodle platform, submitting questions, and interpreting the agent’s responses. Participants were encouraged to use the AI agent to set weekly academic goals, reflect on their goal achievements, and adjust their study plans as needed.

Interaction Process: Over the two-week pilot study period, participants interacted with the AI agent on a daily basis. For example, a participant might input, "I didn’t meet my reading goal last week. What should I do?" The AI agent would respond, "It’s okay that you didn’t meet your goal. Let’s adjust it together. Based on your progress, a revised SMART goal could be: 'I will practice skimming for 20 minutes daily and focus on identifying main ideas, aiming to improve my comprehension by a noticeable amount by next week.' I believe in you! How do you feel about this new goal?" Through such interactions, the AI agent provided participants with personalized guidance and support, helping them enhance their self-directed learning abilities.

Reflective Meetings

Meeting Arrangement: At the end of the two-week pilot study, researchers organized a group reflective meeting with participants, in which students shared their experiences and feedback on using the AI agent.

Discussion Topics: During the reflective meetings, researchers guided participants to reflect on their interactions with the AI agent, focusing on topics such as the agent’s effectiveness in helping them set and achieve academic goals, the quality of the guidance provided, and the agent’s impact on their study habits. Researchers recorded participants’ feedback to gain insights into the AI agent’s strengths and areas for improvement (see image 10).

Image 10

Data Collection

To comprehensively evaluate the effectiveness of the AI agent, researchers collected data on user experience through a pre- and post-survey. The survey contained 10 items covering aspects such as the AI agent’s usability, the relevance and usefulness of its responses, and its impact on students’ self-directed learning abilities. The questionnaire adopted a Likert scale ranging from 1 (Strongly Disagree) to 5 (Strongly Agree), enabling participants to quantitatively assess their satisfaction with the AI agent.

Data Analysis

The collected data underwent quantitative analysis. Researchers calculated descriptive statistics such as means and standard deviations to assess participants’ overall satisfaction with the AI agent. Item analyses were conducted to identify specific aspects of the AI agent that performed well or required improvement.

Data & Discussion

For this case study, six participants from EAP045 were recruited to receive the intervention involving the AI-powered learning platform. A pre- and post-survey was administered to understand students’ perceptions of their self-directed learning behaviors in terms of metacognitive strategies, time management and control, and self-learning actions. Prior to the intervention, a majority of participants (n=4) reported that they rarely set specific learning goals, and only two participants indicated occasional goal-setting practices. Goal-setting is an important academic practice, especially for repeating students who need to improve their academic performance. Its effectiveness has been well researched across a range of academic areas such as reading, writing, and foreign language study (Schunk & Rice, 1989, 1991; Schunk & Swartz, 1993; Moeller, Theiler, & Wu, 2012). Beyond supporting academic subjects, it has also been shown to support self-regulated learning and boost students’ intrinsic motivation (Ames & Archer, 1988; Pajares, Britner & Valiant, 2000; Murayama & Elliot, 2009).

Our findings in the post-survey show that the AI-embedded learning platform intervention resulted in a significant shift in students’ responses to goal-setting with the majority of students (n=5) identifying that their goal-setting behaviors are now more frequent, with only one participant maintaining occasional goal-setting practices. These results support the continued use of this intervention.

Another notable shift was observed in participants' self-reported frequency of evaluating their understanding of the topic before moving on. This refers to metacognitive strategies like reflecting and checking for understanding, which require students to be more aware of their learning progress. Prior to the intervention, all participants (n=6) indicated that they rarely engaged in this metacognitive practice. Post-intervention, a substantial improvement was evident: the majority of participants (n=4) reported frequent evaluation, with one participant indicating consistent engagement in this practice, and another reporting occasional evaluation. This change suggests a significant increase in metacognitive awareness and self-regulation among the study participants.

| | Pre-survey Mean | Post-survey Mean |
| --- | --- | --- |
| Q2: How often do you evaluate your understanding of a topic before moving on? 1-rarely to 4-always | 1 (0.00 SD) | 3.5 (0.836 SD) |

To support students’ self-directed learning skills and help them monitor their own learning, an AI chatbot called “Smart Agent” was embedded into the learning platform. The chatbot could help students summarize or break down materials as well as help them with goal-setting. According to the survey, all participants (n=6) reported utilizing the AI-powered chatbot to assess their comprehension of the course material. This unanimous adoption suggests a significant improvement in AI literacy among the study cohort. The integration of AI tools into students' learning processes indicates not only an increased comfort with such technologies but also a strategic approach to leveraging AI for academic self-assessment.

When it comes to task management and control, analysis of self-reported data revealed a substantial increase in participants' independent learning engagement. The mean time devoted to autonomous study activities rose from a baseline of 4 hours per week to 11 hours per week post-intervention. This represents a 175% increase in self-directed learning time, suggesting a significant enhancement in participants' commitment to independent study practices.
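The 175% figure follows directly from the two reported means, as the short calculation below shows. The function name `percent_increase` is introduced here only for illustration.

```python
def percent_increase(before: float, after: float) -> float:
    """Relative change from `before` to `after`, expressed as a percentage."""
    if before == 0:
        raise ValueError("baseline must be non-zero")
    return (after - before) / before * 100


# Reported means: 4 hours per week before, 11 hours per week after.
print(percent_increase(4, 11))  # 175.0
```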

The last section of the survey involved self-learning actions, requiring students to respond to statements on a Likert scale of 1 (strongly disagree) to 5 (strongly agree). Responses to the statement "I will finish online learning materials and tasks" shifted markedly. Prior to the intervention, the majority of participants (n=5, 83.3%) selected "Disagree," while one participant (16.7%) chose "Neutral" (Mdn = 2, IQR = 0). Post-intervention, a substantial positive change was evident: four participants (66.7%) selected "Agree," and two (33.3%) chose "Strongly Agree" (Mdn = 4, IQR = 1). This shift represents a significant increase in participants' reported intention to complete online learning tasks, with 100% of respondents now expressing agreement or strong agreement with the statement.

| | Pre-survey Mean | Post-survey Mean |
| --- | --- | --- |
| Q12: I will finish online learning materials and tasks. | 1.33 (0.816 SD) | 4.33 (0.516 SD) |
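The post-survey statistics for Q12 can be reproduced from the reported response counts. The sketch below encodes the four "Agree" responses as 4 and the two "Strongly Agree" responses as 5, and assumes the sample standard deviation (n - 1 denominator) and the default quartile method of Python's `statistics` module; other quartile conventions can give a different IQR.

```python
import statistics

# Post-intervention Q12 responses reconstructed from the reported counts:
# four "Agree" (4) and two "Strongly Agree" (5).
post_q12 = [4, 4, 4, 4, 5, 5]

mean = statistics.mean(post_q12)
sd = statistics.stdev(post_q12)             # sample SD, n - 1 denominator
median = statistics.median(post_q12)
q1, _, q3 = statistics.quantiles(post_q12, n=4)
iqr = q3 - q1

print(f"mean={mean:.2f}, sd={sd:.3f}, median={median}, iqr={iqr}")
# mean=4.33, sd=0.516, median=4.0, iqr=1.0
```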

Based on our results, the AI-powered learning platform appears to be substantially beneficial for students in developing their self-directed learning behaviors. This intervention has the potential to support all EAP students. In the context of a Sino-foreign university, many students face challenges in adapting to the academic environment at XJTLU. By employing these methods, we could make a significant impact on students’ academic success.

Conclusion

The implementation of an AI-driven support agent within an EAP045 course highlights the transformative potential of LLMs in addressing the unique challenges of repeating students. By synthesizing prompt engineering with pedagogical principles such as retrieval practice and personalized goal-setting, the AI agent significantly enhanced participants’ self-directed learning behaviors. Post-intervention data revealed substantial improvements in goal-setting consistency, metacognitive reflection, and independent study time, aligning with established theories on self-regulated learning and AI-mediated education. Despite the study’s limited sample size, the results provide a replicable framework for institutions seeking to support students in transitional academic environments. Future research should expand scalability testing, examine long-term behavioral retention, and explore cross-disciplinary applications of similar AI tools. This work contributes to the growing discourse on AI in education, emphasizing its role not as a replacement for human instruction, but as a complementary tool to empower learners and mitigate cycles of academic underperformance.

References

Ames, C., & Archer, J. (1988). Achievement goals in the classroom: Students' learning strategies and motivation processes. Journal of Educational Psychology, 80(3), 260.

Brown, T., Mann, B., Ryder, N., Subbiah, M., Kaplan, J. D., Dhariwal, P., ... & Amodei, D. (2020). Language models are few-shot learners. Advances in Neural Information Processing Systems, 33, 1877-1901.

Ekin, S. (2023). Prompt engineering for ChatGPT: a quick guide to techniques, tips, and best practices. Authorea Preprints.

Ghamati, K., Zaraki, A., & Amirabdollahian, F. (2024, November). ARI humanoid robot imitates human gaze behaviour using reinforcement learning in real-world environments. In 2024 IEEE-RAS 23rd International Conference on Humanoid Robots (Humanoids) (pp. 653-660). IEEE.

Karpicke, J., & Aue, W. (2015). The testing effect is alive and well with complex materials. Educational Psychology Review, 27(2), 317–326. https://doi.org/10.1007/s10648-015-9309-3

Kirschner, P. A., & Hendrick, C. (2020). How Learning Happens. New York: Routledge.

Moeller, A., Theiler, J., & Wu, C. (2012). Goal setting and student achievement: A longitudinal study. Modern Language Journal, 96(2), 153–169.

Murayama, K., & Elliot, A. (2009). The joint influence of personal achievement goals and classroom goal structures on achievement-relevant outcomes. Journal of Educational Psychology, 101(2), 432–447.

Pajares, F., Britner, S. L., & Valiant, G. (2000). Relation between achievement goals and self-beliefs in middle school students in writing and science. Contemporary Educational Psychology, 25(4), 406–422.

Pan, S., & Rickard, T. (2018). Transfer of test-enhanced learning: Meta-analytic review and synthesis. Psychological Bulletin, 144(7), 710–756. https://doi.org/10.1037/bul0000151

Rannen-Triki, A., Bornschein, J., Pascanu, R., Hutter, M., György, A., Galashov, A., ... & Titsias, M. K. (2024). Revisiting Dynamic Evaluation: Online Adaptation for Large Language Models. arXiv preprint arXiv:2403.01518.

Roediger, H. L., III, & Butler, A. C. (2011). The critical role of retrieval practice in long-term retention. Trends in Cognitive Sciences, 15(1), 20–27. https://doi.org/10.1016/j.tics.2010.09.003

Schunk, D. H., & Rice, J. M. (1989). Strategy fading and progress feedback: Effects on self-efficacy and comprehension among students receiving remedial reading services. Journal of Special Education, 27, 257–276.

Schunk, D. H., & Rice, J. M. (1991). Learning goals and progress feedback during reading comprehension instruction. Journal of Reading Behavior, 23, 351–364.

Schunk, D. H., & Swartz, C. W. (1993). Goals and progress feedback: Effects on self-efficacy and writing achievement. Contemporary Educational Psychology, 18, 337–354.

Wei, J., Wang, X., Schuurmans, D., Bosma, M., Xia, F., Chi, E., ... & Zhou, D. (2022). Chain-of-thought prompting elicits reasoning in large language models. Advances in Neural Information Processing Systems, 35, 24824-24837.

Zaraki, A., Khamassi, M., Wood, L. J., Lakatos, G., Tzafestas, C., Amirabdollahian, F., ... & Dautenhahn, K. (2020). A novel reinforcement-based paradigm for children to teach the humanoid kaspar robot. International Journal of Social Robotics, 12, 709-720.

Zawacki-Richter, O., Marín, V. I., Bond, M., & Gouverneur, F. (2019). Systematic review of research on artificial intelligence applications in higher education - where are the educators? International Journal of Educational Technology in Higher Education, 16(1), 39. https://doi.org/10.1186/s41239-019-0171-0