Abstract:

To address the lack of effective review strategies among students preparing for the English for Academic Purposes (EAP) exams, this study was conducted based on the assessment focus of previous EAP exams at Xi'an Jiaotong-Liverpool University (XJTLU), which covers vocabulary (in-class tests), speaking (individual tests, Mini Viva), and writing (group proposals). Relying on the university's independently developed XIPU AI Junmou platform, six AI agents with differentiated functions were designed, including: an ICT Word Master for vocabulary memorization and assessment; SCW1 Practice Pal and Mini Viva Partner for speaking simulation and feedback; and three writing support agents, namely Academic Style Pal, XJTLU Harvard Cite&Ref Pro, and EAP Paraphraser and Summariser.

The study adopted Vygotsky's Zone of Proximal Development (ZPD) theory and Zimmerman's Self-Regulated Learning (SRL) theory as its theoretical frameworks. The former informs scaffolded support that dynamically adjusts task difficulty, while the latter frames the monitoring of the learning process along three dimensions: personal, behavioral, and environmental. Together, they are used to explore how the AI agents support students' autonomous EAP learning.

The results indicate that the six AI agents can significantly improve students' EAP autonomous learning strategies and learning efficiency. However, issues such as biased option distribution, lack of voice interaction, and inaccuracies in academic expression correction were identified. Further algorithm iterations are required to rectify these problems, thereby ensuring academic integrity.

1. Introduction

In English for Academic Purposes (EAP) courses at the tertiary level, first-year students are often expected to develop academic skills across multiple assessment formats. For example, at Xi’an Jiaotong-Liverpool University (XJTLU), one of the leading transnational universities in China, students in Year One EAP modules are assessed through a variety of exam types, including an individual speaking test, a group written proposal, a Mini Viva in which students verbally defend their written proposal, and two vocabulary tests. These assessment tasks require not only content knowledge but also a high degree of self-regulation, confidence, and independent learning. However, many students struggle to prepare for such tasks on their own (Evans & Morrison, 2011; Shepard & Rose, 2023), and they often lack the strategies, routines, and confidence needed to manage exam preparation independently.

To address the challenges faced by students, our teaching team designed and implemented six AI agents to support students’ assessment preparation. These tools were developed in-house by in-service language teachers using XIPU AI Junmou, XJTLU’s own AI platform. In this context, teachers serve dual roles as both educators and AI agent designers, so that the AI tools are closely adapted to classroom realities and specific learning objectives. This reflective article examines how the six agents were used to scaffold students’ assessment preparation and promote greater learner autonomy. It draws on two theoretical lenses: Vygotsky’s Zone of Proximal Development (ZPD) and the concept of self-regulated learning (SRL). We aim to show how custom-built AI tools can support not only skill development but also students’ confidence and ability to self-regulate their learning, thereby enhancing their overall assessment readiness.

2. Theoretical Framework

2.1 Zone of Proximal Development (ZPD)

The Zone of Proximal Development (ZPD), introduced by Vygotsky (1978), refers to the gap between what learners can achieve independently and what they can accomplish with appropriate support. This concept highlights the potential for growth when learners are guided and engage with others (Groot et al., 2020). In language learning, ZPD is particularly relevant as learners often need help navigating unfamiliar genres, academic conventions, or communicative tasks. Scaffolding, a key instructional strategy associated with the ZPD, provides targeted and temporary support that enables learners to complete tasks they cannot manage alone. Ohta (2005) stressed that in second language acquisition, such scaffolded interaction helps learners internalize new language forms and functions within meaningful contexts.

Recent technological developments have expanded the ways scaffolding can be delivered. AI tools in particular offer dynamic and individualized support, allowing for real-time adaptation based on learners’ needs. These tools can also analyze student input and tailor feedback, pacing, and content to match the learner’s ZPD (Jiang et al., 2024). In doing so, AI agents can simulate the role of a teacher or peer in providing just-in-time assistance. As noted by Cai et al. (2025), the integration of AI technology allows educators to identify learners’ ZPD more accurately and provide scaffolding that improves learning outcomes and engagement.

2.2 Self-Regulated Learning (SRL)

2.2.1 Definition

Self-regulated learning (SRL) refers to learners actively managing the cognitive, motivational, and behavioral aspects of their learning process. It involves setting goals, selecting strategies, monitoring progress, and reflecting on outcomes (Seban & Urban, 2024; Winne & Hadwin, 2010). Unlike passive learning, SRL emphasizes learner agency, where students initiate and sustain goal-directed behaviors independently rather than relying solely on external regulation by teachers or instructional systems (Zimmerman, 1986, 1989).

SRL is a key predictor of academic success, as self-regulated learners show greater persistence, resilience, and adaptability, which contribute to higher academic outcomes (Harding et al., 2019). A meta-analysis by Xu et al. (2022) reports a moderate effect size for SRL interventions across online and blended learning contexts in higher education. As students navigate complex and flexible digital and transnational learning environments, SRL is becoming increasingly essential.

2.2.2 Supporting Self-Regulated Learning through AI Agents: A Triadic Perspective

Zimmerman (1986) conceptualized SRL as a triadic process involving the reciprocal interaction of three domains: personal, behavioral, and environmental factors. This model provides a valuable framework for exploring how AI agents, particularly those designed for assessment preparation in higher education, can support learners’ self-regulatory processes. The personal dimension includes cognitive and affective factors such as metacognition, self-efficacy, goal orientations, and procedural knowledge. Pressley et al. (1987) emphasized the importance of procedural knowledge, that is, knowing how to perform tasks, in enabling flexible strategy use. In assessment contexts, AI agents can offer targeted guidance on task types, formats, and academic conventions, fostering learners’ procedural knowledge and scaffolding their understanding of how to approach assessments effectively (Triberti et al., 2024).

The behavioral domain consists of students’ observable efforts to manage their learning. Zimmerman and Martinez-Pons (1986) outlined three key behaviors: self-observation (tracking performance), self-judgment (comparing progress to goals), and self-reaction (adjusting strategies or motivation accordingly). In assessment preparation, AI agents directly support these behaviors by offering immediate, targeted feedback on quizzes or tasks (Chen et al., 2024; Vasou et al., 2025).

The third component, the environmental dimension of SRL, concerns how learners shape and respond to their learning contexts through structuring their environment and seeking support (Zimmerman & Martinez-Pons, 1986). A central process is modeling, as observing effective strategies enhances self-efficacy and strategic knowledge (Bandura, 1986). AI agents simulate teacher-like guidance, breaking down complex tasks into clear, step-by-step models students can observe, imitate, and internalize (Chen, 2025).

Building upon the theoretical underpinnings of ZPD and SRL, which highlight the importance of scaffolded support and learner agency in mastering new academic skills, the following sections will focus on the practical application of these principles. We will explore how customized AI agents were designed and implemented to specifically target various assessment components within the EAP curriculum.

3. AI Agents for Assessment Readiness

3.1 Vocabulary Tests

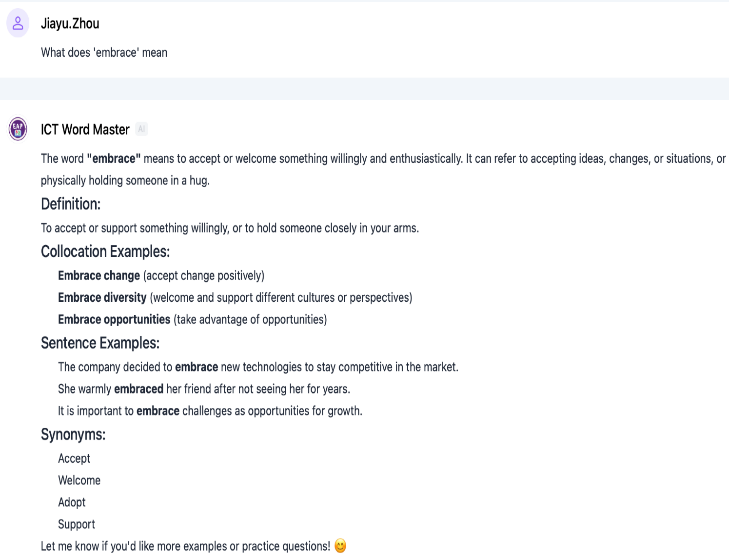

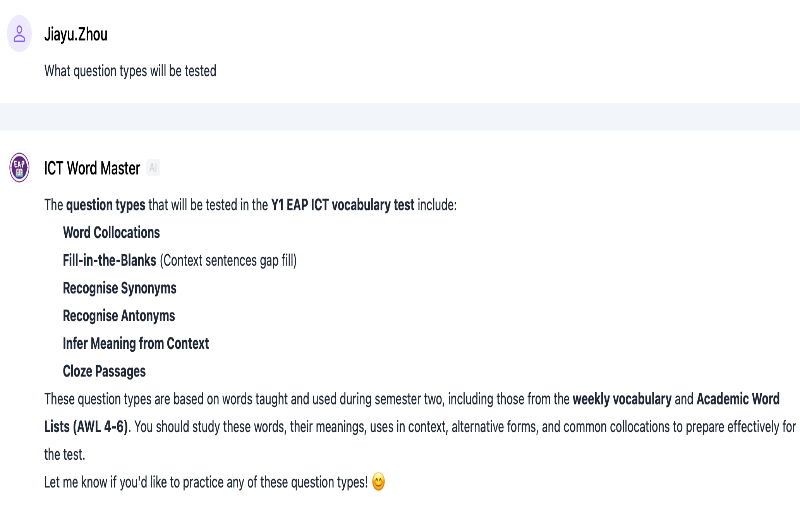

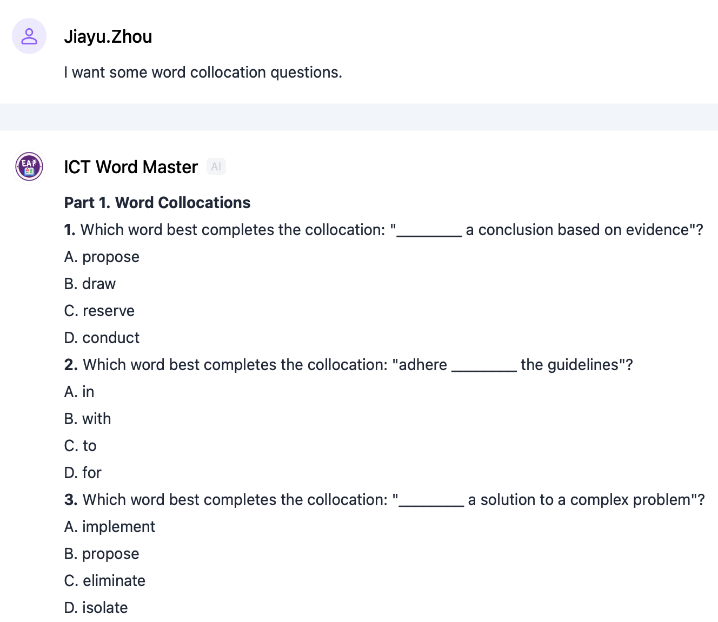

The ICT Word Master is an AI tutor crafted to help Year 1 EAP students sharpen their vocabulary skills through active, self-directed practice. It goes beyond simple definitions by including collocations, examples, and synonyms that enhance word understanding and contextual learning (see Figure 1). These features not only reinforce meaning but also illustrate how words function in academic discourse. More importantly, it generates test-style multiple-choice questions (MCQs) drawn from the Academic Word List (AWL) and our in-house weekly teaching materials, ensuring alignment with vocabulary tests (see Figures 2 and 3). In addition, instant feedback on students’ answers (see Figure 4) allows them to immediately identify misunderstandings, correct errors, and consolidate their vocabulary knowledge through targeted revision.

Despite its useful functions, the ICT Word Master still has a few limitations. One noticeable issue is its tendency to overuse option ‘A’ as the correct answer in MCQs, even when explicitly prompted to randomise the choices. This bias likely stems from the complexity of the task, which may lead the agent to fall back on option ‘A’ as a default. Additionally, its focus on the AWL can overshadow weekly topic-specific words, causing uneven vocabulary coverage that may leave gaps in students’ vocabulary development if they rely solely on this tool for revision. These limitations highlight the need for ongoing refinement and complementary teaching strategies to ensure more balanced and effective learning.
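One way to picture a possible workaround for the option ‘A’ bias is to shuffle answer positions outside the language model rather than relying on the prompt. The Python sketch below is illustrative only: it assumes that generated questions could be exported and post-processed, which is not a feature described for the ICT Word Master, and the function names and the sample item are hypothetical.

```python
import random
from collections import Counter

LABELS = ["A", "B", "C", "D"]


def shuffle_options(correct, distractors, rng):
    """Place the correct answer and distractors in random order.

    Returns a dict mapping option labels to option text, plus the label
    under which the correct answer ended up.
    """
    options = [correct] + list(distractors)
    rng.shuffle(options)  # in-place shuffle using the supplied generator
    labelled = dict(zip(LABELS, options))
    answer_label = LABELS[options.index(correct)]
    return labelled, answer_label


def answer_key_distribution(items, seed=0):
    """Count how often each label holds the correct answer after shuffling."""
    rng = random.Random(seed)  # seeded so the check is reproducible
    counts = Counter()
    for correct, distractors in items:
        _, label = shuffle_options(correct, distractors, rng)
        counts[label] += 1
    return counts


# Hypothetical item, repeated only to inspect the spread of answer positions.
items = [("significant", ["signify", "signal", "signature"])] * 400
counts = answer_key_distribution(items)
print(counts)
```

With the shuffling done in code, each label should hold the correct answer roughly a quarter of the time, and the printed counts offer a quick check that no single option dominates.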

Figure 1. Word Explanations

Figure 2. Test-style Question Types

Figure 3. Collocation MCQs

Figure 4. Instant Feedback

3.2 Speaking Assessments

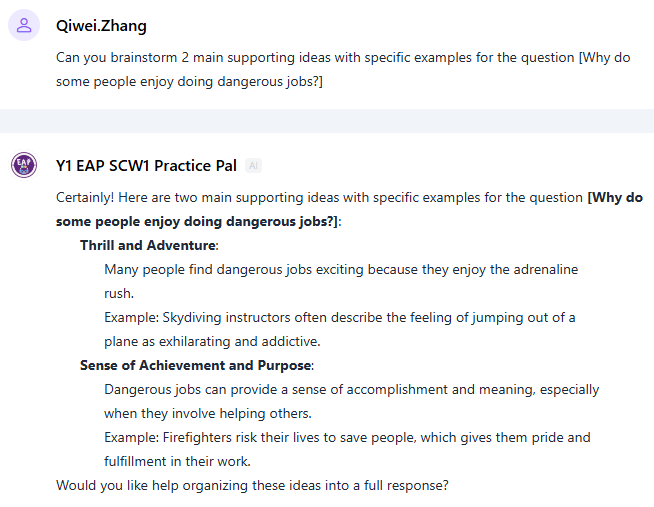

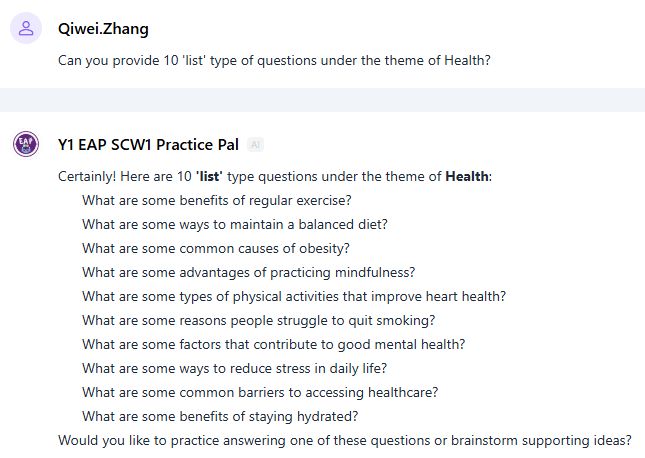

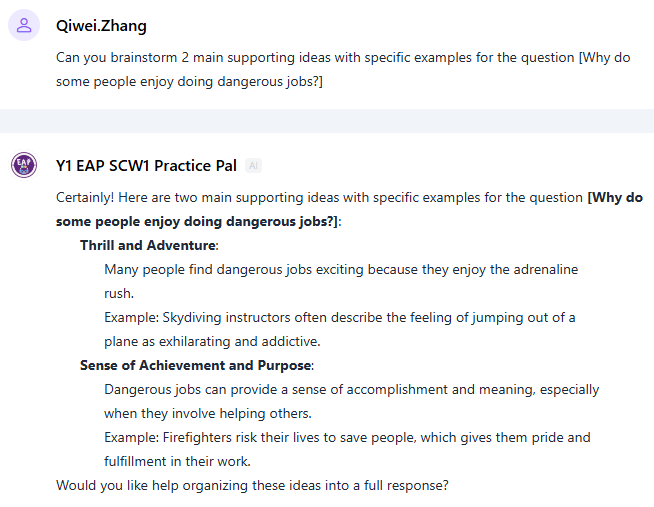

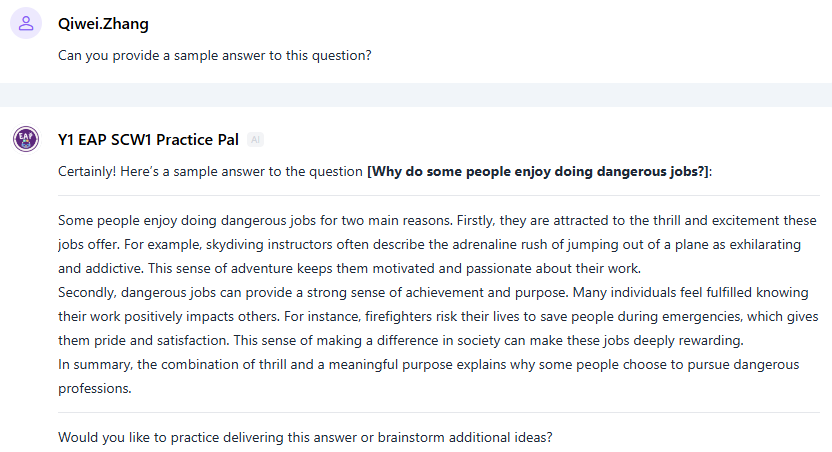

To support students’ speaking exam preparation, two AI tutors were developed to address the distinct requirements of each assessment type. In Year 1 Semester 1, students are assessed on their ability to develop ideas on assigned topics and respond spontaneously to interview questions. The SCW1 Practice Pal is specifically designed to help students understand the task requirements outlined in the Task Sheet (see Figure 5), generate practice questions based on the themes covered in the curriculum (see Figure 6), brainstorm ideas on given topics (see Figure 7), and structure sample answers (see Figure 8). Through interaction with this AI agent and the examples it provides, students can learn effective strategies for organizing their ideas logically and expanding responses with concrete examples and additional details.

Figure 5. Task Requirements Information

Figure 6. Generate Practice Questions

Figure 7. Brainstorm Ideas

Figure 8. Providing Sample Answers

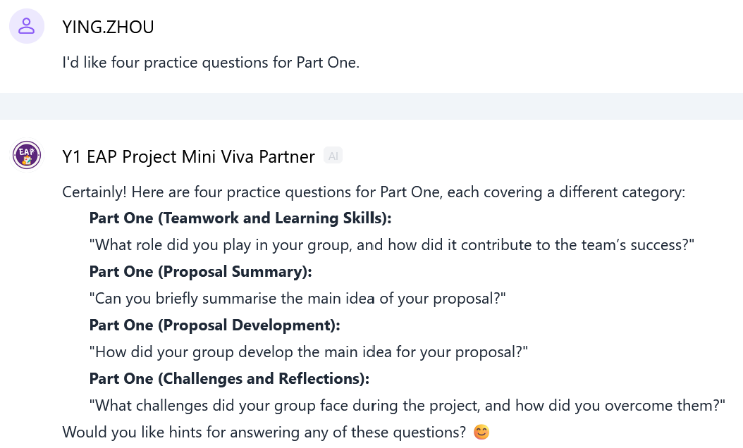

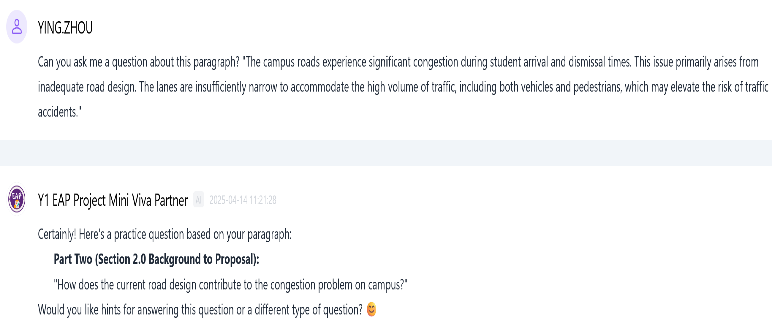

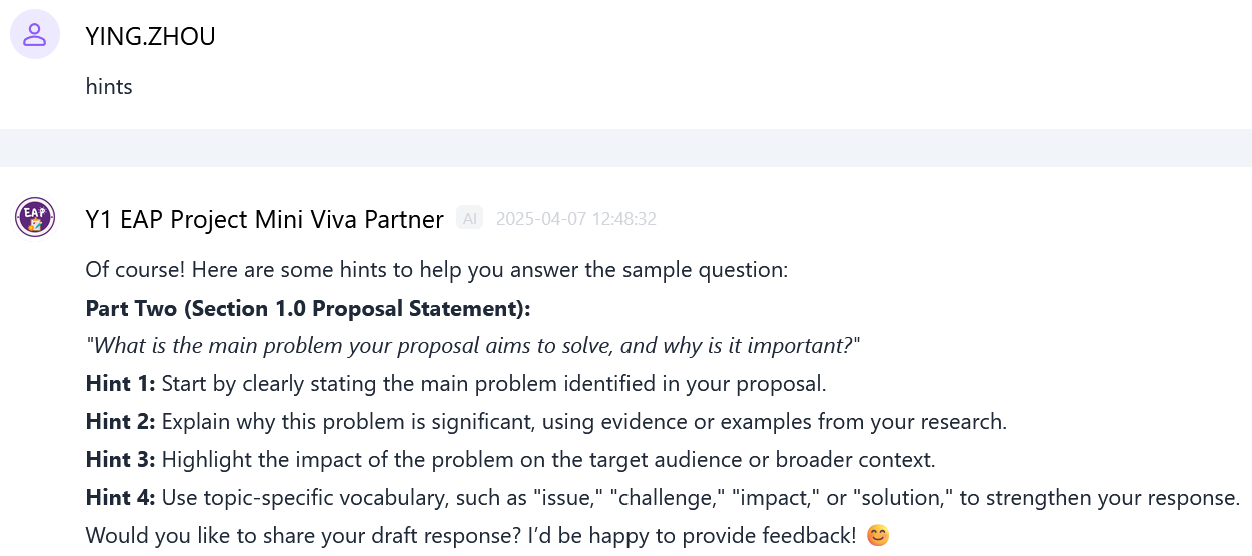

In Year 1 Semester 2, the Mini Viva Partner was created to support preparation for the Mini Viva, an oral assessment that tests students’ understanding of their written proposals. It generates two types of practice questions: (1) general questions aligned with official exam categories (see Figure 9), and (2) tailored questions based on each student’s own written work (see Figure 10). It also offers guided answer structures and written feedback on students’ responses (see Figures 11 and 12), building confidence and presentation skills.

Nevertheless, both agents lack voice-enabled practice and real-time conversational interaction, which limits students’ opportunities for natural spoken communication. We recommend combining these text-based AI tutors with speech-enabled tools, such as Doubao. For the Mini Viva Partner, having students input their proposals in smaller sections can help generate more targeted, relevant questions, enhancing their exam preparation.

Figure 9. General Questions

Figure 10. Specific Questions

Figure 11. Guided Structure

Figure 12. Written Feedback

3.3 Writing Coursework Support

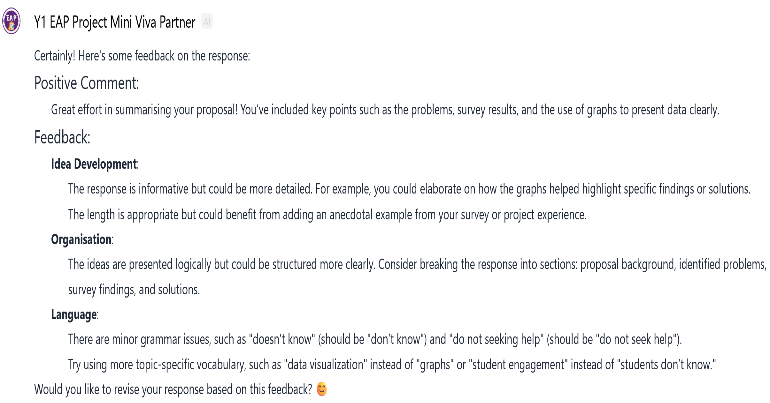

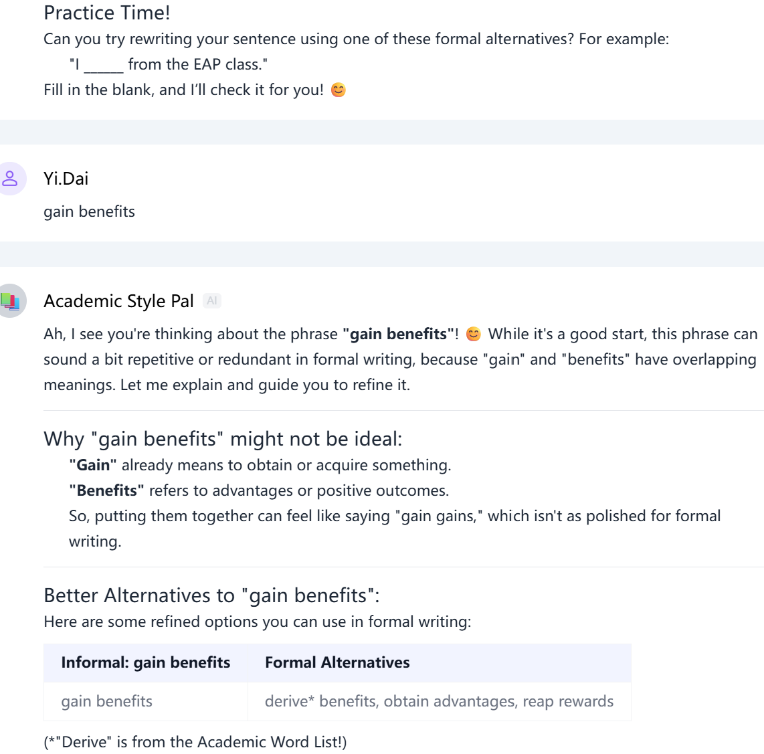

To support the development of academic literacy needed for writing coursework, three AI tutors were developed: Academic Style Pal, XJTLU Harvard Cite&Ref Pro, and EAP Paraphraser and Summariser. Firstly, Academic Style Pal aims to serve as a supportive AI assistant for students who are beginning their journey in academic writing and are unfamiliar with formal academic language. Unlike the general responses students may obtain from mainstream GenAI chatbots, the agent helps students explore the differences between formal and informal language in a structured way. When asked whether a certain vocabulary choice is formal or informal, the agent first guides students to examine language choices in different contexts before suggesting formal alternatives and more detailed examples in academic writing (see Figure 13). The agent also marks AWL items with an asterisk (*) for easy reference. Rather than simply providing answers about formality, the Academic Style Pal encourages active learning through guided practice and reflection (see Figure 14), allowing students to gradually discover linguistic nuances on their own. However, it should be emphasised that Academic Style Pal is designed to be just one component of a student’s learning toolkit. It works best when used in conjunction with other EAP teaching resources and materials. The tool aims to support, not replace, traditional instruction as students develop their understanding of academic writing conventions through independent exploration and practice.

Figure 13. Contextual Vocabulary Guidance

Figure 14. Guided Practice and Feedback

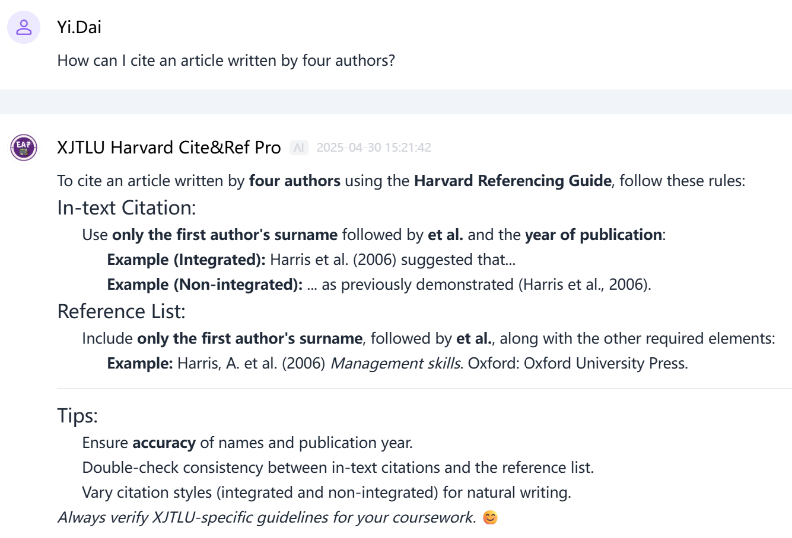

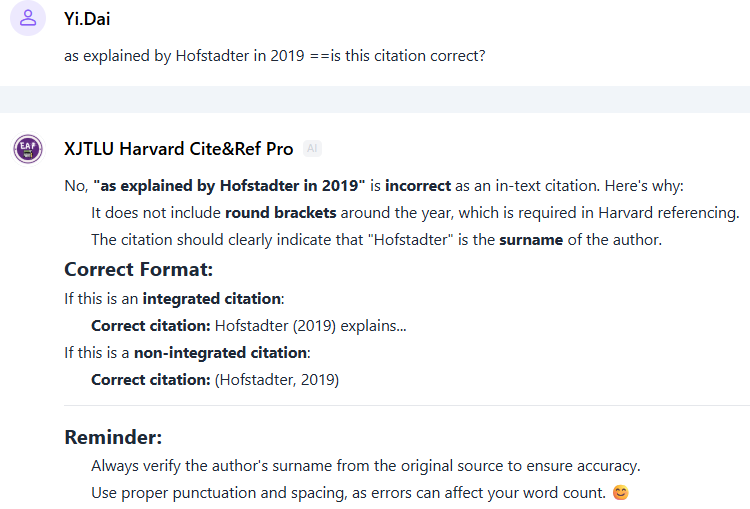

Secondly, the XJTLU Harvard Cite&Ref Pro helps Year 1 students master citation and referencing according to the XJTLU-specific Harvard style, offering context-specific guidance that is especially valuable for EAP learners encountering citation conventions for the first time. It delivers clear, immediate explanations of citation and referencing rules, supported by practice examples (see Figure 15), to help students understand and apply citation techniques accurately. In addition, the agent provides feedback on citation and referencing errors (see Figure 16), helping students revise their work to meet institutional standards. These features not only enhance students’ technical referencing skills but also promote academic integrity by reinforcing the importance of proper source attribution. While effective, this agent still has limitations. It may struggle to identify authors’ surnames when context is unclear, and it cannot verify URLs or DOIs, which limits reference validation. This was partly addressed by including, in the agent’s prompt, a disclaimer asking users to check these details manually. Future versions may support additional referencing styles for broader academic use.

Figure 15. Citation and Referencing Rules

Figure 16. Detect and Fix Citation Errors

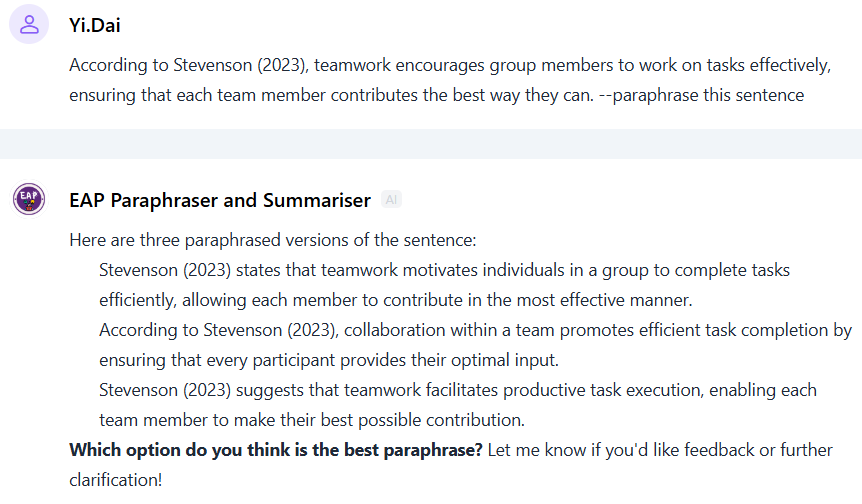

The third AI tool, EAP Paraphraser and Summariser, builds upon skills in citation and referencing. It allows users to specify custom output lengths, offering flexibility for diverse academic needs. It also provides instant proofreading feedback on students’ paraphrases and summaries, identifying areas for improvement and suggesting revisions. A standout feature is its interactive paraphrase comparison (see Figure 17), which generates three alternative paraphrases for students to evaluate so that they can select the one that best fits their needs. This encourages active learning by prompting critical engagement with AI outputs and reinforcing rephrasing techniques. However, the agent may at times oversimplify complex texts, so students should be encouraged to review and refine the outputs to ensure accuracy and appropriateness for academic use.

Figure 17. Interactive Paraphrase Comparison

3.4 Mapping Agents to Theory

In this project, AI agents served as digital scaffolds tailored to EAP students’ needs, with clear learning goals such as expanding vocabulary, improving speaking fluency, and strengthening academic writing. Each tool is adapted to assessment requirements while taking into consideration students’ current proficiency levels, offering more targeted support than generic AI tools. As Wass et al. (2011) suggested, scaffolding can come not only from teachers but also from other sources. In our case, AI agents acted as accessible, on-demand support, extending learning beyond the classroom and complementing traditional instruction.

The agents also mapped well onto Zimmerman’s SRL model. Cognitively, they guided students through procedural tasks (e.g., citation formatting) while modeling academic conventions. Behaviorally, immediate feedback enabled self-monitoring and adjustment. Strategically, the tools encouraged self-reflection, revision, and independent problem-solving. For example, the speaking agents deconstructed complex tasks into manageable steps, guiding students toward autonomous learning and strategy experimentation. For direct access to each AI agent described, please see the Appendix.

4. Conclusion and Pedagogical Implications

This paper demonstrated that six AI agents, grounded in Vygotsky’s ZPD and Zimmerman’s SRL framework, effectively scaffold Year 1 EAP students’ independent assessment preparation. Through targeted vocabulary quizzes, speaking prompts, and academic writing support with timely feedback, these tools fostered key SRL behaviors such as goal setting and strategy adjustment. However, occasional biases and inaccuracies highlight the need for complementary teacher support or student self-verification to ensure academic integrity.

To maximize their impact, AI agents should be integrated into course design with clear links to learning outcomes. Instructors can follow up AI practice with in-class reflection and use performance data (e.g., vocabulary trends) to guide metacognitive discussions. Activities can train students to verify AI-generated content and recognize when to seek human feedback. Combining AI tools with peer review, multimodal tasks (e.g., voice interactions), and guided reflection ensures academic rigor while nurturing learner autonomy in navigating complex academic demands.

Note:

ICT stands for “In-Class Test”, an exam taken during class time to assess students’ vocabulary knowledge.

References

Bandura, A. (1986). Social foundations of thought and action: A social cognitive theory. Prentice-Hall.

Cai, L., Msafiri, M. M., & Kangwa, D. (2025). Exploring the impact of integrating AI tools in higher education using the Zone of Proximal Development. Education and Information Technologies, 30, 7191–7264.

Chen, M., Wu, L., Liu, Z., & Ma, X. (2024). The impact of metacognitive strategy-supported intelligent agents on the quality of collaborative learning from the perspective of the Community of Inquiry. In 2024 4th International Conference on Educational Technology (ICET) (pp. 11–17). IEEE. https://doi.org/10.1109/ICET62460.2024.10869268

Chen, N. S. (2025). Harnessing large language models for education: A framework for designing effective pedagogical AI agents. In E. Smyrnova-Trybulska, N. S. Chen, P. Kommers, & N. Morze (Eds.), E-learning and enhancing soft skills (pp. 13–35). Springer. https://doi.org/10.1007/978-3-031-82243-8_2

Evans, S., & Morrison, B. (2011). Meeting the challenges of English-medium higher education: The first-year experience in Hong Kong. English for Specific Purposes, 30, 198–208.

Groot, F., Jonker, G., Rinia, M., Ten Cate, O., & Hoff, R. G. (2020). Simulation at the frontier of the Zone of Proximal Development: A test in acute care for inexperienced learners. Academic Medicine, 95, 1098–1105.

Harding, S.-M., English, N., Nibali, N., Griffin, P., Graham, L., Alom, B., & Zhang, Z. (2019). Self-regulated learning as a predictor of mathematics and reading performance: A picture of students in grades 5 to 8. Australian Journal of Education, 63(1), 74–97. https://doi.org/10.1177/0004944119830153

Jiang, Y. H., Shi, J., Tu, Y., Zhou, Y., Zhang, W., & Wei, Y. (2024). For learners: AI agent is all you need. In Enhancing educational practices: Strategies for assessing and improving learning outcomes (pp. 21–46).

Ohta, A. S. (2005). Interlanguage pragmatics in the zone of proximal development. System, 33, 503–517.

Pressley, M., Borkowski, J. G., & Schneider, W. (1987). Cognitive strategies: Good strategy users coordinate metacognition and knowledge. In R. Vasta & G. Whitehurst (Eds.), Annals of child development (Vol. 5, pp. 89–129). JAI Press.

Seban, P., & Urban, K. (2024). Examining the utilisation of learning techniques and strategies among pedagogy students: Implications for self-regulated learning. Journal of Pedagogy, 15(1), 27–49. https://doi.org/10.2478/jped-2024-0002

Shepard, C., & Rose, H. (2023). English medium higher education in Hong Kong: Linguistic challenges of local and non-local students. Language and Education, 37, 788–805.

Triberti, S., Di Fuccio, R., Scuotto, C., Marsico, E., & Limone, P. (2024). “Better than my professor?” How to develop Artificial Intelligence Tools for higher education. Frontiers in Artificial Intelligence, 7. https://doi.org/10.3389/frai.2024.1329605

Vasou, M., Kyprianou, G., Amanatiadis, A., & Chatzichristofis, S. A. (2025). Transforming education with AI and robotics: Potential, perceptions, and infrastructure needs. In M. Themistocleous, N. Bakas, G. Kokosalakis, & M. Papadaki (Eds.), Information systems. EMCIS 2024. Lecture Notes in Business Information Processing (Vol. 535, pp. 1–13). Springer. https://doi.org/10.1007/978-3-031-81322-1_1

Vygotsky, L. (1978). Mind in society: The development of higher psychological processes. Harvard University Press.

Wass, R., Harland, T., & Mercer, A. (2011). Scaffolding critical thinking in the Zone of Proximal Development. Higher Education Research & Development, 30(3), 317–328. https://doi.org/10.1080/07294360.2010.489237

Winne, P. H., & Hadwin, A. F. (2010). Self-regulated learning and socio-cognitive theory. In International encyclopedia of education (Vol. 3, pp. 503–508). Elsevier. https://doi.org/10.1016/B978-0-08-044894-7.00470-X

Xu, Z., Zhao, Y., Zhang, B., Liew, J., & Kogut, A. (2022). A meta-analysis of the efficacy of self-regulated learning interventions on academic achievement in online and blended environments in K–12 and higher education. Behaviour & Information Technology, 42(16), 2911–2931. https://doi.org/10.1080/0144929X.2022.2151935

Zimmerman, B. J. (1986). Becoming a self-regulated learner: Which are the key subprocesses? Contemporary Educational Psychology, 11, 307–313.

Zimmerman, B. J. (1989). Models of self-regulated learning and academic achievement. In B. J. Zimmerman & D. H. Schunk (Eds.), Self-regulated learning and academic achievement: Theory, research, and practice (pp. 1–25). Springer.

Zimmerman, B. J., & Martinez-Pons, M. (1986). Development of a structured interview for assessing student use of self-regulated learning strategies. American Educational Research Journal, 23, 614–628.

Appendix

ICT Word Master: https://xipuai.xjtlu.edu.cn/v3/agent/10782

SCW1 Practice Pal: https://xipuai.xjtlu.edu.cn/v3/agent/10800

Mini Viva Partner: https://xipuai.xjtlu.edu.cn/v3/agent/11082

Academic Style Pal: https://xipuai.xjtlu.edu.cn/v3/agent/10383

XJTLU Harvard Cite&Ref Pro: https://xipuai.xjtlu.edu.cn/v3/agent/10732

Paraphraser and Summariser: https://xipuai.xjtlu.edu.cn/v3/agent/10738